The One Where Joey Speaks French

Stop AI hallucinations before they wreck your credibility

You know that Friends episode where Joey insists he speaks French?

"Je de coup fluh… blu blah." Joey stares proudly. He thinks he's speaking fluent French.

Phoebe stares back.

"That was actually worse than the first one."

He tries again, with even more confidence.

"Fleurblaaah bleeuh bluh!"

Phoebe breaks.

"You're not speaking French, Joey!"

"He's not… he's just doing gibberish! I'm so sorry."

That's exactly what AI sounds like when it hallucinates: confident, fluent-sounding nonsense that almost passes, UNTIL someone asks where the quote came from.

This issue is about spotting that gibberish before it ends up in your board deck, pitch doc, or public-facing content.

I've been using AI more and more for strategic content, competitive research, and framework development. And I've learned something important: AI can be as confident as Joey when it's making stuff up.

Why This Actually Matters

If you've ever:

Pasted an AI-generated stat into a board slide

Let a first-draft blog post go straight to design

Shared a "hot take" without checking the source

...then you've risked an AI hallucination slipping into the wild.

Here's the thing: fluency isn't the same as accuracy. And under deadline pressure, busy teams (mine included) miss that difference.

I've caught myself almost shipping content that sounded brilliant but was completely fabricated. The formatting was perfect. The logic flowed beautifully. The only problem? None of it was true.

Where I've Seen This Go Wrong

The danger zones in my own work:

Executive decks – AI generates market-size numbers that sound credible but have no source

Competitive analysis – Made-up customer logos or funding details

Thought leadership – Citations to research studies that don't exist

Framework development – Brand new "methodologies" that sound official but are invented

Look at every place you rely on AI for first drafts that get only light human review. That's where hallucinations breed.

The Two-Step Process I Actually Use

I've started building fact-checking directly into my AI workflow instead of hoping I'll catch problems later.

Step 1: Make AI critique itself

Before I review any AI draft, I run this:

Draft: Answer the question "[your original prompt]"

Critique: Identify any flaws, gaps, or logical errors

Revise: Produce a final answer that fixes every issue you found

The model writes, becomes its own critic, then rewrites. I start my review with cleaner copy and a list of weak spots it already identified.
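If you'd rather script this pass than paste it by hand, here's a minimal Python sketch. It splits the single Draft/Critique/Revise prompt above into three separate calls so each intermediate step is kept for review. `call_model` is a hypothetical stand-in for whatever AI tool or API you use: it takes a prompt string and returns the reply as text. Treat it as an illustration under those assumptions, not any vendor's API.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to your AI tool and returns its text reply.
ModelFn = Callable[[str], str]


def draft_critique_revise(question: str, call_model: ModelFn) -> dict[str, str]:
    """Run draft -> critique -> revise as three calls, keeping every intermediate step."""
    # Pass 1: the model answers the original prompt.
    draft = call_model(f'Draft: Answer the question "{question}"')

    # Pass 2: the same model critiques its own draft.
    critique = call_model(
        "Critique: Identify any flaws, gaps, or logical errors in this draft.\n\n" + draft
    )

    # Pass 3: the model rewrites with both the draft and the critique in view.
    final = call_model(
        "Revise: Produce a final answer that fixes every issue you found.\n\n"
        f"Draft:\n{draft}\n\nCritique:\n{critique}"
    )

    # Keep the critique so the human reviewer starts with the list of weak spots.
    return {"draft": draft, "critique": critique, "final": final}


if __name__ == "__main__":
    # Stand-in model for a dry run: echoes the step label ("Draft", "Critique", "Revise").
    def echo_model(prompt: str) -> str:
        return prompt.split(":", 1)[0]

    result = draft_critique_revise("What should our Q3 content theme be?", echo_model)
    print(result)  # {'draft': 'Draft', 'critique': 'Critique', 'final': 'Revise'}
```

Splitting the passes costs two extra calls, but it means the critique survives as its own artifact instead of disappearing inside the final answer.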

Step 2: Fact-check every external claim

For anything that references outside sources or data, I use this two-part check:

Challenge claims:

You are a fact-checker. List every claim, statistic, and named source in the draft below.

Flag anything without a verifiable citation and explain why it's suspect.

Demand receipts:

Provide primary sources (public URLs, published studies, or official filings) that confirm or correct: "[insert specific statement]".

If none exist, return "No verifiable source found".

I've wired these prompts into my content pipeline so screening happens by default, not at the last minute when I'm rushing to hit send.
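For a sense of what "wired into the pipeline" could look like, here's a minimal Python sketch of the two-part check. `call_model` is a hypothetical stand-in for your AI tool (prompt in, text out), and `ready_to_publish` is a hypothetical gate, not part of any real tool. The sketch fills the "challenge" and "receipts" prompts above and flags any claim whose answer contains the "No verifiable source found" sentinel. It assumes a human picks the `claims` list from the challenge report, since reliably parsing free-form model output is out of scope here.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to your AI tool and returns its text reply.
ModelFn = Callable[[str], str]

NO_SOURCE = "No verifiable source found"

CHALLENGE_PROMPT = (
    "You are a fact-checker. List every claim, statistic, and named source in the draft below.\n"
    "Flag anything without a verifiable citation and explain why it's suspect.\n\n"
    "{draft}"
)

RECEIPTS_PROMPT = (
    "Provide primary sources (public URLs, published studies, or official filings) "
    'that confirm or correct: "{claim}".\n'
    'If none exist, return "No verifiable source found".'
)


def fact_check(draft: str, claims: list[str], call_model: ModelFn) -> dict:
    """Challenge the whole draft, then demand receipts for each specific claim."""
    # Part 1: ask for every claim, stat, and named source, with suspect ones flagged.
    report = call_model(CHALLENGE_PROMPT.format(draft=draft))

    # Part 2: one "demand receipts" call per claim; collect the ones with no source.
    unverified = []
    for claim in claims:
        answer = call_model(RECEIPTS_PROMPT.format(claim=claim))
        if NO_SOURCE.lower() in answer.lower():
            unverified.append(claim)

    return {"report": report, "unverified": unverified}


def ready_to_publish(result: dict) -> bool:
    # Hypothetical pipeline gate: block anything that still has an unverified claim.
    return not result["unverified"]


if __name__ == "__main__":
    stat = ("B2B marketers who shifted 15% of budget to AI automation saw a 3.4× "
            "pipeline lift, according to Forrester, April 2025.")

    # Stand-in model for a dry run: can't source the Forrester stat when asked for receipts.
    def fake_model(prompt: str) -> str:
        if "primary sources" in prompt and "Forrester" in prompt:
            return NO_SOURCE
        return "(challenge report would appear here)"

    result = fact_check(stat, [stat], fake_model)
    print(result["unverified"])
    print(ready_to_publish(result))  # False
```

The sentinel check only catches cases where the model admits it has nothing. When it does return URLs, someone still has to open them, because models can invent links too.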

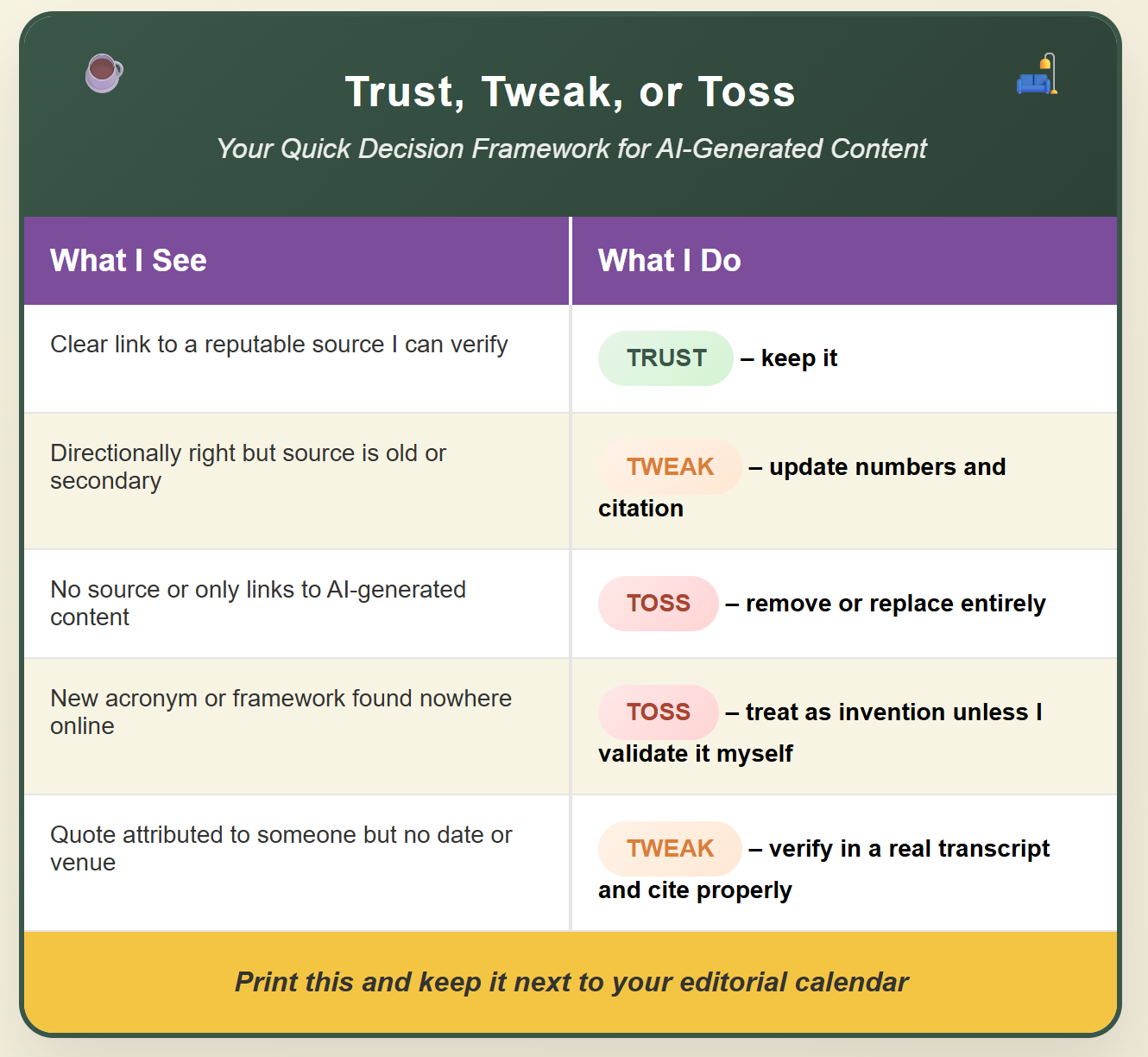

My Quick Decision Framework

When I'm reviewing AI outputs, I use this simple filter:

I keep it taped to my monitor, right next to my “Can AI help you with this task?” Post-it note. It makes decisions fast.

A Real Example From Last Week

I was working on a social media post, and AI generated this line:

"B2B marketers who shifted 15% of budget to AI automation saw a 3.4× pipeline lift, according to Forrester, April 2025."

Sounds reasonable, right? The kind of stat you see in every AI marketing presentation.

When I ran it through my fact-check process, the result came back: "No verifiable source found."

I spent five minutes trying to find that Forrester report. It doesn't exist.

Replaced it with actual data from our own client results. Much more credible. Much more defensible.

What I'm Learning

AI is getting scary good at sounding authoritative about things that aren't true. Not because it's trying to deceive, but because it's optimized for fluency, not accuracy.

The solution isn't to stop using AI. It's to get better at using it intelligently.

I'm treating AI like a very talented intern who occasionally embellishes. Great at structure, synthesis, and getting ideas flowing. Terrible at distinguishing between "sounds right" and "is right."

My job as the CMO is to harness the brilliance while protecting against the blind spots.

Because the last thing I want is to walk into a board meeting speaking Joey's version of French.

Next Week

I'm diving into the most awkward dynamic happening in marketing teams right now.

You know that Friends episode where Monica and Chandler think their relationship is still a secret, but everyone already knows? And then it becomes this weird game of "They don't know that we know they know we know"?

That's exactly what's happening with AI adoption in most organizations.

Leadership thinks teams aren't using AI yet, or at least not for advanced tasks and workflows. Teams think leadership doesn't want them using AI. Meanwhile, everyone's secretly using it, pretending they're not, and nobody's talking about what's actually working.

Working title: The One Where They Don't Know We Know They Know We Know.

I'll break down why this "secret AI" dynamic is killing innovation, how it's wasting time and money, and what happens when everyone finally stops pretending.

Au revoir,

---Lisa

P.S. If this helped you dodge a potential hallucination, forward it to another marketer who needs the same heads-up.